Using EKS to Deploy all the Pull Requests

By Tim Macfarlane on September 06, 2019 • 19 min read

This is a pretty technical post! My goal here is to document some of the lesser known aspects of setting up EKS. It’s by no means an introduction to Kubernetes or AWS concepts so I’m presuming some prior experience and knowledge.

Deploying all the pull requests

We recently built a system that deployed a brand new, isolated environment for each and every pull request submitted to one of our larger project’s repositories. Heroku calls these Review Apps. I talk about the advantages of deploying review apps here. The TLDR is that before we had review apps we were releasing every week or two, after we had review apps we were releasing every day or two. It’s all about where you do your manual exploratory testing. Do you do it on the pull-request? Or once your code is in master? It can make a big difference to how often you can release.

Since we were already running our production and test environments in AWS, and since Kubernetes seemed to be a good environment to deploy and run an arbitrary number of different applications, EKS made a lot of sense. EKS is AWS’s managed Kubernetes cluster product.

How does this thing work?

Like many applications, ours is made up of several microservices and third party dependencies such as databases. This is primarily a manual test environment so we throw in a few extra diagnostic tools such as redis-commander to look inside our redis DBs, maildev to see what emails are being sent by the system and to whom. Because we have several services, it’s not possible to use Heroku’s Review Apps which really only work for single service applications, and, more to the point, with our production system deployed on AWS, it was a good idea to stay on AWS for our test environments.

Each review app has a domain name that includes the PR number and the name of the service. We use a convention such as $SERVICE-pr-$PR_NUMBER.example.com, so we’d have domain names such as web-pr-1234.example.com and api-pr-1234.example.com for the “web” and “api” services respectively, on the pull request with number 1234.
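The naming convention can be sketched as a small shell helper. This is only an illustration: the function name review_app_host and the BASE_DOMAIN variable are made up for this example, and example.com stands in for the real domain.

```shell
# Build a review app hostname following the $SERVICE-pr-$PR_NUMBER convention.
# BASE_DOMAIN is a hypothetical variable; example.com is a placeholder domain.
BASE_DOMAIN="${BASE_DOMAIN:-example.com}"

review_app_host() {
  # $1 = service name (e.g. web), $2 = pull request number (e.g. 1234)
  printf '%s-pr-%s.%s\n' "$1" "$2" "$BASE_DOMAIN"
}

# Print the hostnames for the "web" and "api" services of pull request 1234.
for service in web api; do
  review_app_host "$service" 1234
done
```

Keeping the convention in one helper means the CI scripts and the ingress configuration can derive the same hostname from the service name and PR number.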

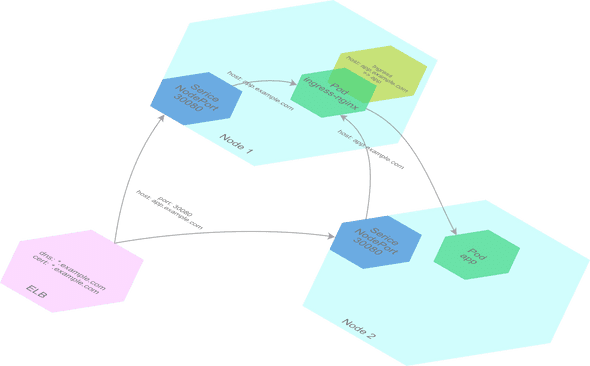

We have a wildcard DNS entry, *.example.com, pointing at a single load balancer (ELB), which also has a wildcard certificate for the same domain. This means all traffic going to those different domains goes to the same load balancer. Inside the cluster, we use what is known as an ingress controller to route the requests to the corresponding service running on the cluster. An ingress controller is usually a load balancer like Nginx that reads the configuration about which host maps to which app from ingress resources you place in the cluster.

Here’s a more or less full breakdown of what happens:

- We push some code to pull request #1234

- Our CI checks out the code and deploys it to our Kubernetes cluster (we used kubecfg, helm templates were just too hard to maintain 😭)

- Our CI also interacts with the GitHub Deployments API so we have nice feedback on the progress of the deployment and finally links to our review app from the pull request page.

- We click on the View Deployment link, or type in an address such as web-pr-1234.example.com

- Our browser resolves the IP address, which points to our ELB

- Our browser makes a request to our ELB, with the host header being web-pr-1234.example.com.

- The ELB forwards that request to the ingress controller inside the Kubernetes cluster (see below), which finds the ingress resource that matches our host header, and forwards the request onto the corresponding Kubernetes service, which in turn (!) forwards the request onto one of the service’s running pods.

- We’re using SSL, so the browser will check that the wild card certificate we used (*.example.com) matches the domain entered in the address bar (web-pr-1234.example.com).

- The browser renders some HTML and we’re good to go.

It would have been nicer to have a domain like web.pr-1234.example.com, but unfortunately you can only have wild card domains and certificates at one level, such as *.example.com, not *.*.example.com. It’s possible of course that we could have automated the creation of new certificates and DNS entries for each of our pull requests but this seemed a bit excessive..!
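To make the one-level rule concrete, here is a rough sketch of wildcard matching in shell. The function name matches_wildcard is invented for this example, and it is a simplification of what browsers do (it ignores case and only handles patterns of the form *.suffix).

```shell
# Succeed if hostname $1 is covered by wildcard pattern $2 (e.g. *.example.com).
# The wildcard stands in for exactly one DNS label, so it never spans a dot.
matches_wildcard() {
  case "$2" in
    '*.'*) ;;            # only patterns of the form *.suffix are handled
    *) return 1 ;;
  esac
  suffix="${2#\*.}"      # pattern without the leading "*."
  label="${1%%.*}"       # first label of the hostname
  rest="${1#*.}"         # everything after the first dot
  [ -n "$label" ] && [ "$1" != "$rest" ] && [ "$rest" = "$suffix" ]
}

for host in web-pr-1234.example.com web.pr-1234.example.com; do
  if matches_wildcard "$host" '*.example.com'; then
    echo "$host matches"
  else
    echo "$host does not match"
  fi
done
```

The first hostname has a single label in front of example.com and matches; the second has two, so the wildcard cannot cover it.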

With this basic idea in mind we got to work putting all this together. This article shows some of the working out we did while making the routing and load balancing work, as well as a problem we bumped into as more and more applications were deployed onto the system, the famous max pod limit problem. We do this with commands that you can use to do it yourself.

Routing and Load Balancing

There are three-ish different ways EKS can interact with load balancers on AWS. They differ largely in what or who creates and configures the load balancers: kubernetes or yourself (i.e. with CloudFormation). I’ll go through each option briefly here.

(Dan Maas has written up the first two of these already in his article on Setting up Amazon EKS)

- A LoadBalancer service

    apiVersion: v1
    kind: Service
    metadata:
      name: app-service
    spec:
      type: LoadBalancer
      selector:
        app: app
      ports:
        - port: 80
          targetPort: 80

Simply creating a Kubernetes service of type LoadBalancer will create a new ELB in the same VPC. The advantage is that it’s a pretty simple way of getting a public address for an app inside the Kubernetes cluster. The disadvantage is that you won’t have much more control over the routing. You can’t, for example, have one ELB routing to multiple backends based on hostname or path.

Another disadvantage here is that while EKS will create the ELB automatically for you, you will likely want to set up a DNS entry for it, HTTPS certificates and perhaps even CloudFront, at which point you’ll be falling back on CloudFormation or manual processes.

You’ll also need to make sure your subnets have the correct tags for this to work, see: https://docs.aws.amazon.com/eks/latest/userguide/load-balancing.html.

- The ALB Ingress Controller

As people are increasingly using the ingress features of Kubernetes over the LoadBalancer service, a native ingress controller for AWS seems to make a lot of sense. Documentation for this can be found here: https://github.com/kubernetes-sigs/aws-alb-ingress-controller.

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: app
      namespace: app
      annotations:
        kubernetes.io/ingress.class: alb
        alb.ingress.kubernetes.io/scheme: internet-facing
        alb.ingress.kubernetes.io/tags: Environment=dev,Team=test
    spec:
      rules:
        - host: app.example.com
          http:
            paths:
              - path: /
                backend:
                  serviceName: app-service
                  servicePort: 80

The idea here is that a single ingress resource will create an AWS ALB (application load balancer) to give you a public entrypoint, allowing you to do host-based or path-based routing. The slight issue here is that if there are two ingress resources, two ALBs will be created! This is different from the way other ingress controllers work, where there is often just one entrypoint, configured with multiple ingress resources. In short, if you want to manage just one load balancer (with corresponding DNS, certs, etc) for all incoming traffic, your applications will be forced to merge their configuration into a single ingress resource, which is far from ideal.

Again, like the LoadBalancer service above, the ALB ingress controller will create the ALB automatically for you. It can also set up DNS using Route 53 for you, see here. Setup of more advanced things like certificates and CloudFront seems to be out of scope, so you’ll likely be doing this manually or with CloudFormation templates.

Like the LoadBalancer service above, make sure your subnets have the correct tags for this to work, see: https://docs.aws.amazon.com/eks/latest/userguide/load-balancing.html.

- Regular ELB + NodePort service + Ingress

The third option is a much more traditional AWS setup. We have an ELB that points to a number of nodes in the Kubernetes cluster on a particular port (we’re going to use 30080, in the default allowed range of 30000-32767 for a NodePort). We then deploy a regular ingress-nginx setup onto the cluster, but with a service of type NodePort. All traffic will be directed to the ingress-nginx pods, which are configured by the one or more ingress resources required by your deployments.

The advantage of this approach is that you have a single ELB that you can set up with CloudFormation alongside other elements such as HTTPS certificates, DNS, CloudFront, etc. You could even have two or more ELBs pointing to the same cluster, depending on DNS and certificate requirements.

We’re going to show how you can set up your cluster routing with this last approach.

Creating The Cluster

As hinted at above, there are indeed plenty of ways you can create an EKS cluster and its surrounding infrastructure. We’re going to use eksctl to demonstrate in this article, but for production we opted for a single CloudFormation template that set up all the infrastructure (ELBs, HTTPS certificates, CloudFront, DNS, etc) and meant that we could execute the template on each master deployment to keep the infrastructure in line with its configuration.

eksctl is a good tool, but it’s quite imperative, whereas for GitOps you really want something that’s declarative. Imperative means you issue individual commands to change the cluster; declarative means you simply change the configuration, execute it, and the system will determine what needs to be changed or updated. CloudFormation and indeed Kubernetes are both great examples of declarative systems. eksctl has some GitOps support in the works though, something to wait for.

As we’re using eksctl, we’re going to create our cluster in a few steps:

- Create the VPC, the subnets, and the EKS cluster using eksctl.

- Create the ELBs and related elements using CloudFormation, using outputs from step 1.

- Finally, create the node group using outputs from step 2. A node group is eksctl’s word for, well, a group of nodes in a cluster, each node having the same instance type and AMI. You can have multiple node groups in a cluster.

(Using CloudFormation you can put all this into a single CloudFormation template but that’s probably for another time.)

Anyway, enough chat. Let’s create a cluster:

    eksctl create cluster \
      --name ReviewApps \
      --region us-west-2 \
      --without-nodegroup

Behind the scenes eksctl will create a CloudFormation stack (called eksctl-ReviewApps-cluster) with the elements required to run the EKS cluster, things like the VPC, private and public subnets, internet gateways, etc. We don’t create a node group yet because, as we’ll see, we need to connect the EC2 instances in the node group to the ELB infrastructure.

Next we’ll create our ELB. Here’s the CloudFormation template:

    AWSTemplateFormatVersion: '2010-09-09'
    Description: Review Apps Infrastructure

    Parameters:
      Vpc:
        Type: String
        Description: The VPC to place this ELB into.
      PublicSubnets:
        Type: CommaDelimitedList
        Description: A comma-delimited list of public subnets in which to run the ELBs

    Resources:
      WorkerNodeTargetGroup:
        Type: AWS::ElasticLoadBalancingV2::TargetGroup
        Properties:
          HealthCheckPath: /healthz
          HealthCheckPort: "30080"
          Protocol: HTTP
          Port: 30080
          Name: !Sub "WorkerTargetGroup"
          VpcId: !Ref Vpc

      LoadBalancerListenerHttp:
        Type: AWS::ElasticLoadBalancingV2::Listener
        Properties:
          DefaultActions:
            - Type: forward
              TargetGroupArn:
                Ref: WorkerNodeTargetGroup
          LoadBalancerArn:
            Ref: LoadBalancer
          Port: 80
          Protocol: HTTP

      LoadBalancer:
        Type: AWS::ElasticLoadBalancingV2::LoadBalancer
        Properties:
          SecurityGroups:
            - !Ref ElbSecurityGroup
          Subnets: !Ref PublicSubnets

      ElbSecurityGroup:
        Type: AWS::EC2::SecurityGroup
        Properties:
          GroupDescription: Incoming Access To HTTP
          VpcId: !Ref Vpc
          Tags:
            - Key: Name
              Value:
                Fn::Join:
                  - ''
                  - - Ref: AWS::StackName
                    - "ElbSecurityGroup"

      ElbSecurityGroupEgress:
        Type: AWS::EC2::SecurityGroupEgress
        Properties:
          GroupId:
            Ref: ElbSecurityGroup
          IpProtocol: "-1"
          FromPort: 0
          ToPort: 65535
          CidrIp: 0.0.0.0/0

      ElbSecurityGroupIngressHttp:
        Type: AWS::EC2::SecurityGroupIngress
        Properties:
          GroupId:
            Ref: ElbSecurityGroup
          IpProtocol: tcp
          FromPort: 80
          ToPort: 80
          CidrIp: 0.0.0.0/0

      NodeSecurityGroup:
        Type: AWS::EC2::SecurityGroup
        Properties:
          GroupDescription: Incoming HTTP for Kubelet
          VpcId: !Ref Vpc
          Tags:
            - Key: Name
              Value:
                Fn::Join:
                  - ''
                  - - Ref: AWS::StackName
                    - "NodeSecurityGroup"

      NodeSecurityGroupEgress:
        Type: AWS::EC2::SecurityGroupEgress
        Properties:
          GroupId:
            Ref: NodeSecurityGroup
          IpProtocol: "-1"
          FromPort: 0
          ToPort: 65535
          CidrIp: 0.0.0.0/0

      NodeSecurityGroupIngressHttp:
        Type: AWS::EC2::SecurityGroupIngress
        Properties:
          GroupId:
            Ref: NodeSecurityGroup
          IpProtocol: tcp
          FromPort: 30080
          ToPort: 30080
          SourceSecurityGroupId: !Ref ElbSecurityGroup

    Outputs:
      NodeSecurityGroup:
        Description: Node Security Group
        Value: !Ref NodeSecurityGroup
      ElbDomainName:
        Description: Load Balancer Domain Name
        Value: !GetAtt LoadBalancer.DNSName
      WorkerNodeTargetGroup:
        Description: Worker Node Target Group
        Value: !Ref WorkerNodeTargetGroup

We’re going to use a tool called cfn, which you can download using Node/NPM: npm i -g cfn.

We need to get some outputs from the eksctl-ReviewApps-cluster stack, namely SubnetsPublic and VPC.
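If cfn isn’t to hand, the same stack outputs can be read with the AWS CLI. The following is a sketch rather than what we ran: stack_output is a helper name made up for this example, and it assumes the AWS CLI is installed and configured for the right account and region.

```shell
# Print a single output value from a CloudFormation stack.
# $1 = stack name, $2 = output key (e.g. VPC or SubnetsPublic)
stack_output() {
  aws cloudformation describe-stacks \
    --stack-name "$1" \
    --query "Stacks[0].Outputs[?OutputKey=='$2'].OutputValue" \
    --output text
}

# Usage (needs AWS credentials and the stack created by eksctl):
#   stack_output eksctl-ReviewApps-cluster VPC
#   stack_output eksctl-ReviewApps-cluster SubnetsPublic
```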

    cfn deploy ReviewAppsLoadBalancer elb.yaml \
      --Vpc $(cfn output eksctl-ReviewApps-cluster VPC) \
      --PublicSubnets $(cfn output eksctl-ReviewApps-cluster SubnetsPublic)

You can get the outputs of the stack with this:

    eks $ cfn outputs ReviewAppsLoadBalancer | json
    {
      "ElbDomainName": "Revie-LoadB-1FBHYHW4171RT-141325971.us-west-2.elb.amazonaws.com",
      "NodeSecurityGroup": "sg-0e131391e2ef1f980",
      "WorkerNodeTargetGroup": "arn:aws:elasticloadbalancing:us-west-2:596351669136:targetgroup/WorkerTargetGroup/34920be01bd9d6e7"
    }

From these we get the target group ARNs and the node security group and enter them into this file:

    apiVersion: eksctl.io/v1alpha5
    kind: ClusterConfig

    metadata:
      name: ReviewApps
      region: us-west-2

    nodeGroups:
      - name: ng-1
        instanceType: t3.medium
        desiredCapacity: 3
        securityGroups:
          withShared: true
          withLocal: true
          attachIDs: ['...'] # node security group here
        targetGroupARNs: ['...'] # target group ARN here
        maxPodsPerNode: 110

That last parameter, maxPodsPerNode, we’ll describe in more detail below.

And run

    eksctl create nodegroup -f cluster.yaml

You should see some active nodes in the cluster now:

    eks $ kubectl get nodes
    NAME                                           STATUS   ROLES    AGE   VERSION
    ip-192-168-14-252.us-west-2.compute.internal   Ready    <none>   47s   v1.13.8-eks-cd3eb0
    ip-192-168-57-164.us-west-2.compute.internal   Ready    <none>   49s   v1.13.8-eks-cd3eb0
    ip-192-168-94-132.us-west-2.compute.internal   Ready    <none>   51s   v1.13.8-eks-cd3eb0

Setting Up Ingress + ELB

Next we’ll install Ingress-Nginx. We take their “mandatory” resource set from this page: https://kubernetes.github.io/ingress-nginx/deploy/

    kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/mandatory.yaml

Then we’ll make a NodePort service for it so it’s listening on port 30080 on each of our nodes in the cluster:

    apiVersion: v1
    kind: Service
    metadata:
      name: ingress-nginx
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    spec:
      type: NodePort
      ports:
        - name: http
          port: 80
          targetPort: 80
          nodePort: 30080
          protocol: TCP
      selector:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx

Save this as ingress-nginx-node-port.yaml and apply it:

    kubectl apply -f ingress-nginx-node-port.yaml

The ELB can take a little while to mark the instances as healthy again (by default, 5 successful checks at 30s intervals, so roughly 2.5 minutes).
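Rather than guessing when the health checks have passed, you could poll the target group. This is a sketch assuming the AWS CLI is configured; the function names are invented for this example, and the ARN to pass in is the WorkerNodeTargetGroup output from the stack.

```shell
# Succeed only when every target in the target group reports "healthy".
# $1 = target group ARN
all_targets_healthy() {
  states=$(aws elbv2 describe-target-health \
    --target-group-arn "$1" \
    --query 'TargetHealthDescriptions[].TargetHealth.State' \
    --output text) || return 1
  [ -n "$states" ] || return 1          # no targets registered yet
  for state in $states; do
    [ "$state" = "healthy" ] || return 1
  done
}

# Check up to 10 times, sleeping 30 seconds between checks.
wait_for_targets() {
  attempts=0
  until all_targets_healthy "$1"; do
    attempts=$((attempts + 1))
    [ "$attempts" -lt 10 ] || return 1
    sleep 30
  done
}

# Usage:
#   wait_for_targets "arn:aws:elasticloadbalancing:...:targetgroup/WorkerTargetGroup/..."
```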

Once they’ve started up, you should be able to check that it’s working. (We get the domain name of the ELB from the CloudFormation outputs above.)

    eks $ curl Revie-LoadB-1FBHYHW4171RT-141325971.us-west-2.elb.amazonaws.com/healthz -v
    * Trying 52.43.72.37...
    * TCP_NODELAY set
    * Connected to Revie-LoadB-1FBHYHW4171RT-141325971.us-west-2.elb.amazonaws.com (52.43.72.37) port 80 (#0)
    > GET /healthz HTTP/1.1
    > Host: Revie-LoadB-1FBHYHW4171RT-141325971.us-west-2.elb.amazonaws.com
    > User-Agent: curl/7.54.0
    > Accept: */*
    >
    < HTTP/1.1 200 OK
    < Date: Mon, 09 Sep 2019 13:08:45 GMT
    < Content-Type: text/html
    < Content-Length: 0
    < Connection: keep-alive
    < Server: openresty/1.15.8.1

If that’s not working, here are some things you’ll need to check when getting an ELB to talk to nodes in a Kubernetes cluster:

- Make sure your security groups are set up correctly. The nodes must be able to accept traffic (specifically in our case port 30080 over TCP) from the security group the ELB is running on.

- Make sure your nodes are in the subnets included in your Target Group.

- Make sure your nodes respond to the health checks configured in the Target Group. Ingress-nginx will respond with a 200 OK to a GET /healthz.

Now we can start demonstrating some apps running behind our ingress load balancer. Put this into backend.yaml:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: backend
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: backend
      template:
        metadata:
          labels:
            app: backend
        spec:
          containers:
            - name: backend
              image: refractalize/proxy-backend
              ports:
                - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: backend
    spec:
      selector:
        app: backend
      ports:
        - protocol: TCP
          port: 80
          targetPort: 80
    ---
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: backend
    spec:
      rules:
        - host: app.example.com
          http:
            paths:
              - path: /
                backend:
                  serviceName: backend
                  servicePort: 80

Then:

    kubectl apply -f backend.yaml

You should now be seeing this:

    eks $ curl Revie-LoadB-1FBHYHW4171RT-141325971.us-west-2.elb.amazonaws.com -H 'Host: app.example.com'
    {
      "host": "backend-5f99c56b5d-cjx26",
      "url": "/",
      "method": "GET",
      "headers": {
        "host": "app.example.com",
        "x-request-id": "2d5143f6b46c0334447ac6e26d9c8f42",
        "x-real-ip": "10.46.0.0",
        "x-forwarded-for": "10.46.0.0",
        "x-forwarded-host": "app.example.com",
        "x-forwarded-port": "80",
        "x-forwarded-proto": "http",
        "x-original-uri": "/",
        "x-scheme": "http",
        "x-original-forwarded-for": "80.245.25.53",
        "x-amzn-trace-id": "Root=1-5d76509a-19d0f1249e754dbf9d05751c",
        "user-agent": "curl/7.54.0",
        "accept": "*/*"
      }
    }

Notice how we’re passing -H 'Host: app.example.com' to curl? The routing we set up for the Ingress looks at the Host header on the request and directs it to the correct Kubernetes service and pods. Remember that when you type “app.example.com” into your browser, the browser does two or three different things with that domain name:

- It resolves the IP address using DNS

- It makes an HTTP request to that IP address with the domain in the host header, e.g. Host: app.example.com

- Finally, in the case of HTTPS, it ensures that the certificate presented in the response matches the hostname entered. The certificate could be a wildcard certificate, in which case it just needs to match app.example.com with *.example.com.

Ingress controllers can route based on the path and protocol too.
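Since the ingress controller only looks at the Host header, you can check any review app hostname against the ELB before DNS is in place. A small sketch, with status_for_host being a made-up helper name:

```shell
# Request "/" from the load balancer while sending a specific Host header.
# $1 = ELB domain name, $2 = Host header to send. Prints the HTTP status code.
status_for_host() {
  curl --silent --output /dev/null --write-out '%{http_code}' \
    --header "Host: $2" "http://$1/"
}

# Usage, with the ELB domain name from the CloudFormation outputs:
#   status_for_host Revie-LoadB-1FBHYHW4171RT-141325971.us-west-2.elb.amazonaws.com app.example.com
```

A hostname with a matching ingress resource should come back with the application’s own status code, while an unknown hostname will typically get a 404 from ingress-nginx’s default backend.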

EKS Max Pods

You will likely find that you can only run a limited number of pods on each node in the cluster… This, believe it or not, is a feature.

Let’s scale our backend to some high number, like 70. Across 3 medium nodes this should be quite reasonable.

    kubectl scale --replicas 70 deploy/backend

You’ll find that some of our pods aren’t starting; they’re stuck in one of two states, Pending or ContainerCreating. You can see the error in more detail by running kubectl describe pod/podname:

- Those that are Pending will have an error message: 0/3 nodes are available: 3 Insufficient pods, 3 node(s) were unschedulable (this implies that we’re hitting the kubelet’s max pod limit.)

- Those that are ContainerCreating will have an error message: NetworkPlugin cni failed to set up pod "podname" network: add cmd: failed to assign an IP address to container (this implies that we’re running out of available IP addresses on the node, or in the subnet.)
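To see at a glance how many pods are stuck in each state, you can tally the STATUS column of kubectl’s output. A rough sketch; count_pod_statuses is a name invented for this example, and it assumes the default column layout of kubectl get pods, where STATUS is the third column.

```shell
# Summarise pod statuses from `kubectl get pods --no-headers` output on stdin.
count_pod_statuses() {
  awk '{ count[$3]++ } END { for (s in count) print s, count[s] }' | sort
}

# Usage against a live cluster:
#   kubectl get pods --no-headers | count_pod_statuses
```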

This is where the max pods setting comes in. Each kubelet (each node, essentially) has what is known as a pod limit. By default this is 110 pods. In EKS it’s much less because of the default AWS CNI installed on each node. A CNI (or network plugin) tells the kubelet how pods should interact with the network interfaces on the node, and handles things like allocating new IP addresses for your pods.

The difference with the AWS CNI plugin is that it allocates real IP addresses on the subnet. This makes your pods accessible from outside the cluster, from anywhere inside your VPC. That might sound useful, but since there are no corresponding DNS entries, and since your pods are likely to be recycled with the usual ebb and flow of deployments, it’s unlikely many people will want to connect directly to your pods. Far more likely, you’ll be using some form of ingress controller to do this.

Also far more likely is that people wonder why they can only run some limited number of pods on each node. The limit exists because there’s only a small number of network interfaces available on each EC2 instance, and each interface can hold only so many IP addresses. This number depends on the instance type; a full list of these limits can be found here.
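As a worked example, the per-node limit under the AWS CNI follows from the number of network interfaces (ENIs) and the IPv4 addresses each one can hold. The function name below is made up for this sketch; the t3.medium figures (3 ENIs, 6 addresses each) are taken from AWS’s published instance limits.

```shell
# Pods per node under the AWS VPC CNI:
#   ENIs * (IPv4 addresses per ENI - 1) + 2
# One address on each ENI is the interface's own primary address; the extra 2
# allows for pods that use host networking instead of a pod IP.
eni_max_pods() {
  # $1 = number of ENIs, $2 = IPv4 addresses per ENI
  echo $(( $1 * ($2 - 1) + 2 ))
}

per_node=$(eni_max_pods 3 6)                    # t3.medium
echo "t3.medium: $per_node pods per node"       # 17
echo "3 nodes:   $(( per_node * 3 )) pods"      # 51
```

With 51 pod slots across three t3.medium nodes, and some already taken by system pods, scaling the backend to 70 replicas was never going to fit.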

To fix all of this, we need to do two things:

- Replace the default CNI with weave-net.

- Reset (or unset) the default pod limit in the kubelets.

We’ll start by replacing the CNI, first delete the AWS CNI:

    kubectl delete ds aws-node -n kube-system

And install weave-net:

    kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"

We’ve already used the maxPodsPerNode setting above on the node group with eksctl. If you’re using CloudFormation to create your cluster and nodes, you can add the following user-data to your LaunchConfiguration or LaunchTemplate. It should look something like this:

    WorkerNodeLaunchConfig:
      Type: AWS::AutoScaling::LaunchConfiguration
      Properties:
        ...
        UserData:
          Fn::Base64:
            !Sub |
              #!/bin/bash
              set -o xtrace
              /etc/eks/bootstrap.sh --use-max-pods false ${EksCluster}
              /opt/aws/bin/cfn-signal --exit-code $? \
                --stack ${AWS::StackName} \
                --resource NodeGroup \
                --region ${AWS::Region}

Note the --use-max-pods argument to bootstrap.sh. By default the bootstrap will start the kubelet with the limit for the instance type. By passing --use-max-pods false we ignore this limit and use the kubelet’s default limit, which is normally 110 pods per node. More information on how bootstrap.sh works here, and what cfn-signal is here.

You’ll very likely have to reboot all the nodes in the cluster to make both of these changes a reality. The eksctl approach for this would be simply to scale the nodes to zero, and back up to original number.

    eksctl scale nodegroup --name ng-1 --cluster ReviewApps --nodes 0
    eksctl scale nodegroup --name ng-1 --cluster ReviewApps --nodes 3

Now you’re much more likely to be able to use your EC2 instances to their full capacity and save a few dollars in the process.
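Once the nodes are back, it’s worth confirming that the kubelets picked up the new limit. One way, sketched here with a made-up helper name, is to ask each node how many pods it reports as allocatable:

```shell
# List each node with the number of pods its kubelet will accept.
node_pod_capacity() {
  kubectl get nodes \
    -o custom-columns='NAME:.metadata.name,PODS:.status.allocatable.pods'
}

# Usage:
#   node_pod_capacity
```

If the PODS column still shows the instance type’s ENI-based limit rather than the value you configured, the nodes are probably still running with their old bootstrap settings.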

Wrapup

In summary, there are different ways to set up the EKS cluster. We’ve used eksctl here, but CloudFormation is also a great option. We’ve set up a more traditional ELB configuration using a NodePort service for ingress-nginx. Finally, we’ve removed the limit on the number of pods per node imposed by AWS’s default CNI.

It’s always a good idea to get some decent logging and monitoring on your infrastructure too. I won’t go into that here, but we used DataDog on our project and made sure that each of our pods was annotated in such a way that we could easily identify logs coming from a single instance of a system (with multiple services), a single service (with multiple pods) or indeed just a single pod.

The rest of our work in getting our review apps working involved some automation of build servers, deploying builds on new pushes, and finally cleaning up review apps when the pull requests were closed.

In all, our review apps (and their infrastructure) have been very successful. Our review apps are used by members of our team for manual testing, of course, but also by many of the people and teams we interact with, whether that’s to discuss how features work, for acceptance testing with clients and stakeholders, or just to see what other team members are up to.